Make measuring the impact of training easy

You can finally access training evaluation metrics that show you, in real time and at scale, how and where training is impacting your business, beyond course completion rates and test scores.

Empower your leaders

Give your regional and frontline managers actionable insights that they can translate into meaningful opportunities to coach and boost employee engagement, all while supporting their teams and building new skills and new knowledge.

Tie training program efficacy to business goals

Are your training and development programs hitting the mark when it comes to learning outcomes? It’s time to eliminate the guesswork. Employee training metrics make it clear which programs are driving business impact and influencing the results you care about, and which ones are missing the mark, so you can easily adjust your learning strategy on the fly.

Axonify closes the loop on learning measurement

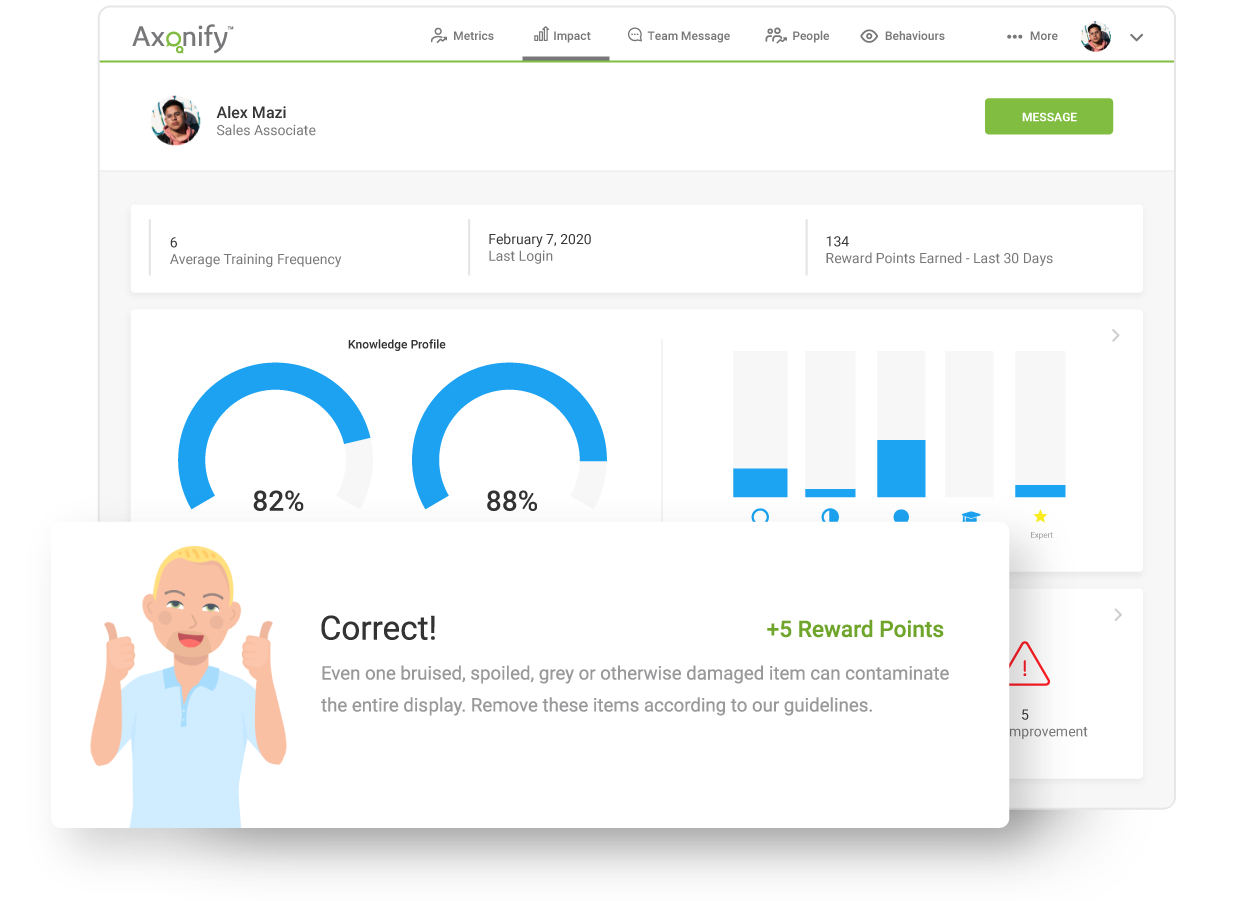

Uncover meaningful coaching opportunities

Your frontline managers have a lot to do, which means they may not get as much one-on-one time to evaluate training with their teams as they would like. With Axonify, they can see a complete knowledge profile for every employee, so they can measure training effectiveness and know where to focus their coaching efforts.

Quickly spot your top performers

Completion rates can tell you who’s finished their learning program, but not what actually sticks. Reporting in Axonify helps every frontline manager see the areas of high employee engagement and where their employees are most knowledgeable and confident, allowing them to spot top performers who are ready to mentor others.

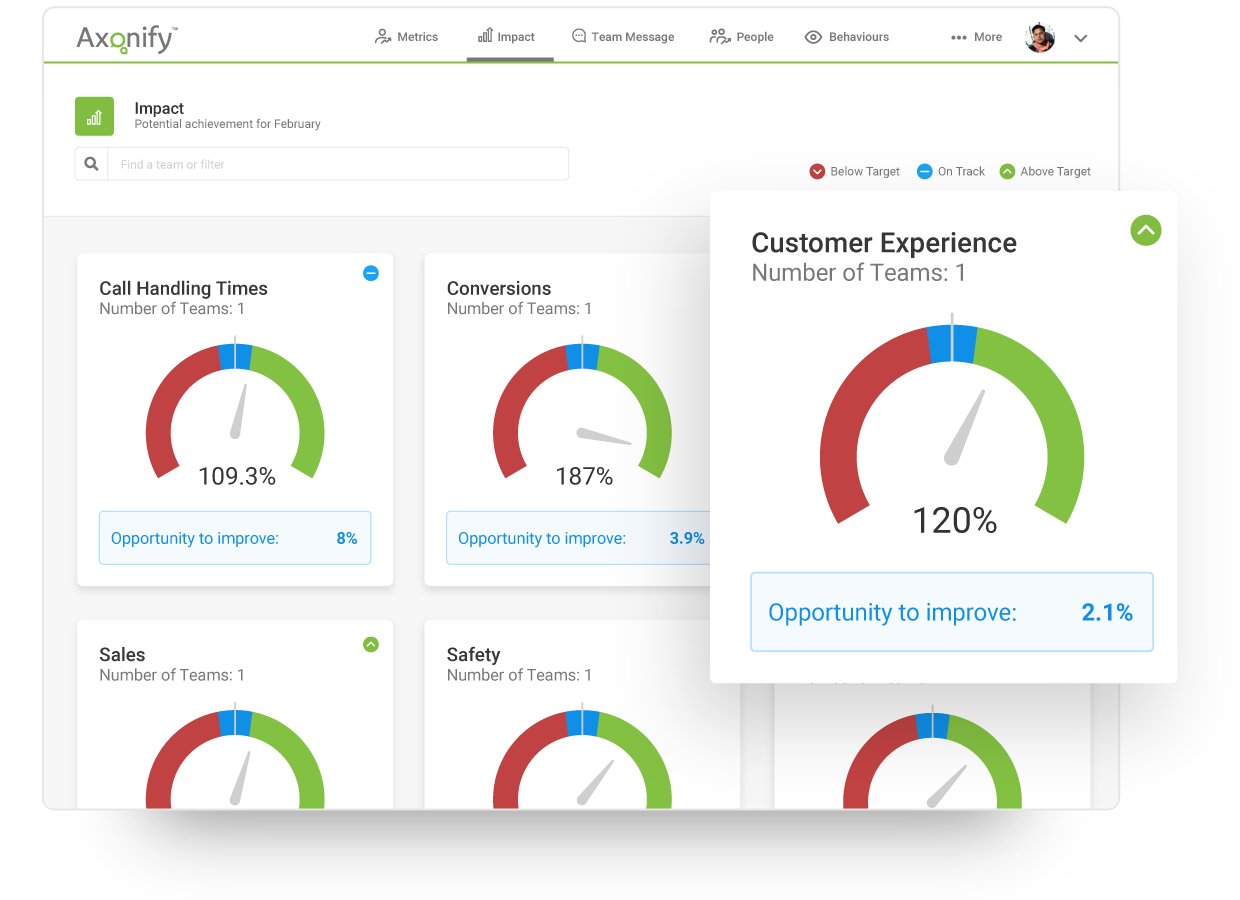

Get real-time insights on the business impact of training

Set individual-, team- or company-level business targets, tag the training programs that correspond to them and let Axonify simplify the training evaluation process for you. Dashboards generate actionable insights on how training is influencing business targets like employee performance, basket size, CSAT, shrink or call resolution times.